Highly Available Kubernetes control plane install using Ansible

A highly available Kubernetes cluster can be installed using two different approaches. For a fault-tolerant cluster, it is recommended to run an odd number of master nodes, at least 3 or 5, because a majority (more than 50%) of them must be available at any time. With three master nodes, if one goes down for any reason, the two remaining master nodes can still handle the requests.

Example:

1. Two master nodes, and one goes down, leaving 1 master node to serve the traffic.

In this scenario, we are left with 1/2 = 50% availability, which is not a majority.

2. Three master nodes, and one goes down in an unplanned situation.

In this scenario, we are left with 2/3 ≈ 67% availability, which is still a majority.
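
The arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, assuming each master node also runs an etcd member, so the cluster stays writable only while a majority (quorum) of members is up. The function names `quorum` and `fault_tolerance` are hypothetical helpers for this example, not part of any Kubernetes tooling.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with `members` voting members."""
    if members < 1:
        raise ValueError("members must be >= 1")
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """Number of members that can fail while a majority is still available."""
    return members - quorum(members)


if __name__ == "__main__":
    # Print the quorum size and tolerated failures for 1 to 5 master nodes.
    for n in range(1, 6):
        print(f"{n} masters: quorum={quorum(n)}, tolerated failures={fault_tolerance(n)}")
```

Under these assumptions, two masters tolerate no failures, three tolerate one, four still tolerate only one, and five tolerate two, which is why odd cluster sizes are preferred.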

The two available approaches for a highly available cluster are:

1. With stacked control plane nodes. This approach requires less infrastructure. The etcd members and control plane nodes are co-located.

2. With an external etcd cluster. This approach requires more infrastructure. The control plane nodes and etcd members are separated.

The playbook run below uses the stacked approach.

[kubeadmin@ansible-master k8s-HA-ansible-install]$ ansible-playbook -i inventory k8s-controlplane-join.yaml --ask-become-pass

BECOME password:

PLAY [master1] *************************************************************************************************************************

changed: [master1]

TASK [set join command fact] ***********************************************************************************************************

TASK [generate the control plane cert key] *********************************************************************************************

changed: [master1]

TASK [register the cert key] ***********************************************************************************************************

ok: [master1]

PLAY [master2] *************************************************************************************************************************

TASK [Gathering Facts] *****************************************************************************************************************

ok: [master2]

TASK [join control-plane nodes] ********************************************************************************************************

changed: [master2]

TASK [debug] ***************************************************************************************************************************

ok: [master2] => {

"msg": {

"changed": true,

"cmd": "kubeadm join 192.168.166.9:6443 --token qzp6f7.mjzjwfc5tyx7vmkz --discovery-token-ca-cert-hash sha256:787bcf56aed6588bf052e8c3be0453fa4fe3f6cd06249f10669364d4940cd97d --control-plane --certificate-key 85c15dd88a157a03e1f873244a9fdc58d27a2efff84ade62ec21529375119188",

"delta": "0:01:17.932220",

"end": "2020-12-02 14:10:47.127348",

"failed": false,

"rc": 0,

"start": "2020-12-02 14:09:29.195128",

"stderr": "\t[WARNING IsDockerSystemdCheck]: detected \"cgroupfs\" as the Docker cgroup driver. The recommended driver is \"systemd\". Please follow the guide at https://kubernetes.io/docs/setup/cri/",

"stderr_lines": [

"\t[WARNING IsDockerSystemdCheck]: detected \"cgroupfs\" as the Docker cgroup driver. The recommended driver is \"systemd\". Please follow the guide at https://kubernetes.io/docs/setup/cri/"

],

"stdout": "[preflight] Running pre-flight checks\n[preflight] Reading configuration from the cluster...\n[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'\n[preflight] Running pre-flight checks before initializing the new control plane instance\n[preflight] Pulling images required for setting up a Kubernetes cluster\n[preflight] This might take a minute or two, depending on the speed of your internet connection\n[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'\n[download-certs] Downloading the certificates in Secret \"kubeadm-certs\" in the \"kube-system\" Namespace\n[certs] Using certificateDir folder \"/etc/kubernetes/pki\"\n[certs] Generating \"apiserver-etcd-client\" certificate and key\n[certs] Generating \"etcd/server\" certificate and key\n[certs] etcd/server serving cert is signed for DNS names [localhost master2] and IPs [172.16.0.11 127.0.0.1 ::1]\n[certs] Generating \"etcd/peer\" certificate and key\n[certs] etcd/peer serving cert is signed for DNS names [localhost master2] and IPs [172.16.0.11 127.0.0.1 ::1]\n[certs] Generating \"etcd/healthcheck-client\" certificate and key\n[certs] Generating \"apiserver\" certificate and key\n[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master2] and IPs [172.16.0.1 172.16.0.11 192.168.166.9]\n[certs] Generating \"apiserver-kubelet-client\" certificate and key\n[certs] Generating \"front-proxy-client\" certificate and key\n[certs] Valid certificates and keys now exist in \"/etc/kubernetes/pki\"\n[certs] Using the existing \"sa\" key\n[kubeconfig] Generating kubeconfig files\n[kubeconfig] Using kubeconfig folder \"/etc/kubernetes\"\n[kubeconfig] Writing \"admin.conf\" kubeconfig file\n[kubeconfig] Writing \"controller-manager.conf\" kubeconfig file\n[kubeconfig] Writing \"scheduler.conf\" kubeconfig file\n[control-plane] Using manifest folder \"/etc/kubernetes/manifests\"\n[control-plane] Creating static Pod manifest for \"kube-apiserver\"\n[control-plane] Creating static Pod manifest for \"kube-controller-manager\"\n[control-plane] Creating static Pod manifest for \"kube-scheduler\"\n[check-etcd] Checking that the etcd cluster is healthy\n[kubelet-start] Writing kubelet configuration to file \"/var/lib/kubelet/config.yaml\"\n[kubelet-start] Writing kubelet environment file with flags to file \"/var/lib/kubelet/kubeadm-flags.env\"\n[kubelet-start] Starting the kubelet\n[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...\n[etcd] Announced new etcd member joining to the existing etcd cluster\n[etcd] Creating static Pod manifest for \"etcd\"\n[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s\n[upload-config] Storing the configuration used in ConfigMap \"kubeadm-config\" in the \"kube-system\" Namespace\n[mark-control-plane] Marking the node master2 as control-plane by adding the label \"node-role.kubernetes.io/master=''\"\n[mark-control-plane] Marking the node master2 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]\n\nThis node has joined the cluster and a new control plane instance was created:\n\n* Certificate signing request was sent to apiserver and approval was received.\n* The Kubelet was informed of the new secure connection details.\n* Control plane (master) label and taint were applied to the new node.\n* The Kubernetes control plane instances scaled up.\n* A new etcd member was added to the local/stacked etcd cluster.\n\nTo start administering your cluster from this node, you need to run the following as a regular user:\n\n\tmkdir -p $HOME/.kube\n\tsudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config\n\tsudo chown $(id -u):$(id -g) $HOME/.kube/config\n\nRun 'kubectl get nodes' to see this node join the cluster.",

"stdout_lines": [

"[preflight] Running pre-flight checks",

"[preflight] Reading configuration from the cluster...",

"[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'",

"[preflight] Running pre-flight checks before initializing the new control plane instance",

"[preflight] Pulling images required for setting up a Kubernetes cluster",

"[preflight] This might take a minute or two, depending on the speed of your internet connection",

"[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'",

"[download-certs] Downloading the certificates in Secret \"kubeadm-certs\" in the \"kube-system\" Namespace",

"[certs] Using certificateDir folder \"/etc/kubernetes/pki\"",

"[certs] Generating \"apiserver-etcd-client\" certificate and key",

"[certs] Generating \"etcd/server\" certificate and key",

"[certs] etcd/server serving cert is signed for DNS names [localhost master2] and IPs [172.16.0.11 127.0.0.1 ::1]",

"[certs] Generating \"etcd/peer\" certificate and key",

"[certs] etcd/peer serving cert is signed for DNS names [localhost master2] and IPs [172.16.0.11 127.0.0.1 ::1]",

"[certs] Generating \"etcd/healthcheck-client\" certificate and key",

"[certs] Generating \"apiserver\" certificate and key",

"[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master2] and IPs [172.16.0.1 172.16.0.11 192.168.166.9]",

"[certs] Generating \"apiserver-kubelet-client\" certificate and key",

"[certs] Generating \"front-proxy-client\" certificate and key",

"[certs] Valid certificates and keys now exist in \"/etc/kubernetes/pki\"",

"[certs] Using the existing \"sa\" key",

"[kubeconfig] Generating kubeconfig files",

"[kubeconfig] Using kubeconfig folder \"/etc/kubernetes\"",

"[kubeconfig] Writing \"admin.conf\" kubeconfig file",

"[kubeconfig] Writing \"controller-manager.conf\" kubeconfig file",

"[kubeconfig] Writing \"scheduler.conf\" kubeconfig file",

"[control-plane] Using manifest folder \"/etc/kubernetes/manifests\"",

"[control-plane] Creating static Pod manifest for \"kube-apiserver\"",

"[control-plane] Creating static Pod manifest for \"kube-controller-manager\"",

"[control-plane] Creating static Pod manifest for \"kube-scheduler\"",

"[check-etcd] Checking that the etcd cluster is healthy",

"[kubelet-start] Writing kubelet configuration to file \"/var/lib/kubelet/config.yaml\"",

"[kubelet-start] Writing kubelet environment file with flags to file \"/var/lib/kubelet/kubeadm-flags.env\"",

"[kubelet-start] Starting the kubelet",

"[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...",

"[etcd] Announced new etcd member joining to the existing etcd cluster",

"[etcd] Creating static Pod manifest for \"etcd\"",

"[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s",

"[upload-config] Storing the configuration used in ConfigMap \"kubeadm-config\" in the \"kube-system\" Namespace",

"[mark-control-plane] Marking the node master2 as control-plane by adding the label \"node-role.kubernetes.io/master=''\"",

"[mark-control-plane] Marking the node master2 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]",

"",

"This node has joined the cluster and a new control plane instance was created:",

"",

"* Certificate signing request was sent to apiserver and approval was received.",

"* The Kubelet was informed of the new secure connection details.",

"* Control plane (master) label and taint were applied to the new node.",

"* The Kubernetes control plane instances scaled up.",

"* A new etcd member was added to the local/stacked etcd cluster.",

"",

"To start administering your cluster from this node, you need to run the following as a regular user:",

"",

"\tmkdir -p $HOME/.kube",

"\tsudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config",

"\tsudo chown $(id -u):$(id -g) $HOME/.kube/config",

"",

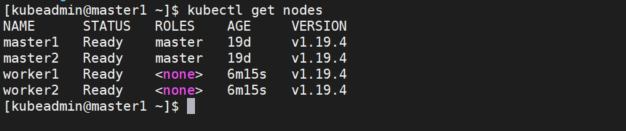

"Run 'kubectl get nodes' to see this node join the cluster."

]

}

}

PLAY RECAP *****************************************************************************************************************************

master1 : ok=4 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
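
The `cmd` value in the debug output above is an ordinary `kubeadm join` command with `--control-plane` and `--certificate-key` appended. The playbook itself is not shown here, so the following Python sketch only illustrates how such a command could be assembled from its two parts. It assumes the base join command comes from `kubeadm token create --print-join-command` and the certificate key from `kubeadm init phase upload-certs --upload-certs`, both run on the first master; the helper name `control_plane_join_command` and the placeholder values are hypothetical.

```python
import shlex


def control_plane_join_command(worker_join: str, certificate_key: str) -> str:
    """Append the control-plane flags to a plain `kubeadm join` command."""
    args = shlex.split(worker_join)
    if args[:2] != ["kubeadm", "join"]:
        raise ValueError("expected a 'kubeadm join ...' command")
    # These two flags turn a worker join into a control-plane join.
    args += ["--control-plane", "--certificate-key", certificate_key]
    return shlex.join(args)


if __name__ == "__main__":
    # Placeholder values; real ones come from the first master node.
    base = (
        "kubeadm join 192.168.166.9:6443 --token TOKEN "
        "--discovery-token-ca-cert-hash sha256:HASH"
    )
    print(control_plane_join_command(base, "CERTKEY"))
```

Keeping the two inputs separate mirrors the task names in the run above ("set join command fact", "register the cert key"), though the actual playbook may combine them differently.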
