Infrastructure Setup with VirtualBox Software

Step 1: Install VirtualBox from https://www.virtualbox.org/.

Step 2:

Create a base image, then make a linked clone of it for each of the six VMs (Ansible-controller, LB, Master1, Master2, Worker1, Worker2).

Download the CentOS images from https://www.osboxes.org/centos/

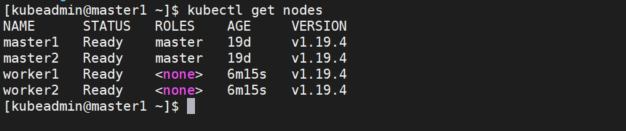

The final output looks like:

Before using Ansible to control the master and worker machines, make a note of the IPv4 address of every machine:

Ansible-master: 192.168.166.12

master1: 192.168.166.10

master2: 192.168.166.11

worker1: 192.168.166.7

worker2: 192.168.166.8

haproxy: 192.168.166.9
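The five target addresses are typed again in the hosts file and in the inventory below. One way to keep them in a single place is a plain-text table read by the shell. This is a sketch only: the `NODE_TABLE` variable and the `print_hosts_lines` function are hypothetical names, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical address table for this lab: one "name IP" pair per line,
# using the addresses listed above. Adjust to match your own VMs.
NODE_TABLE='haproxy 192.168.166.9
master1 192.168.166.10
master2 192.168.166.11
worker1 192.168.166.7
worker2 192.168.166.8'

# Print each entry as "IP name", the layout /etc/hosts expects.
print_hosts_lines() {
  printf '%s\n' "$NODE_TABLE" | while read -r name ip; do
    printf '%s %s\n' "$ip" "$name"
  done
}
```

Running `print_hosts_lines` prints one line per target, in the same order as the table.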

Log in to Ansible-master and install Ansible from the EPEL repository:

$ sudo yum update -y && sudo yum install -y epel-release

$ sudo yum install -y ansible

$ ansible --version


Check the default inventory setting:

$ grep -i inventory /etc/ansible/ansible.cfg

or

$ ansible-config view
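If the grep finds only commented-out lines, Ansible falls back to its built-in default inventory, /etc/ansible/hosts. The hypothetical `cfg_inventory` function below sketches that check for use in a script: it prints the active `inventory` value from the `[defaults]` section of an ansible.cfg-style file, and prints nothing when the key is absent or commented out. It assumes the plain `key = value` form and does not handle inline comments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the uncommented "inventory" value from the
# [defaults] section of an INI-style ansible.cfg (default path shown below).
cfg_inventory() {
  awk '
    # Track whether the current line is inside the [defaults] section.
    /^[ \t]*\[/ { in_defaults = ($0 ~ /^[ \t]*\[defaults\]/); next }
    # First uncommented "inventory = value" line in [defaults].
    in_defaults && /^[ \t]*inventory[ \t]*=/ {
      sub(/^[^=]*=[ \t]*/, "")
      sub(/[ \t]+$/, "")
      print
      exit
    }
  ' "${1:-/etc/ansible/ansible.cfg}"
}
```

Called with no argument it reads /etc/ansible/ansible.cfg; pass a path to inspect a project-level ansible.cfg instead.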

Basic Server setup:

1. Change the hostname

$ sudo su -

$ # Execute the below snippet

cat <<EOF > /etc/hostname
ansible-master
EOF
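Writing /etc/hostname directly works; on CentOS 7 and later, `hostnamectl set-hostname ansible-master` is the systemd way to do the same and applies the name without a reboot. If you script this step, a guard against invalid names helps. The sketch below is hypothetical: `write_hostname` takes the target file as an optional second argument (defaulting to /etc/hostname) so it can be tried on a scratch file first, and it accepts only a single lowercase label (letters, digits, hyphens, at most 63 characters, no leading or trailing hyphen).

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate a single-label hostname, then write it
# to a file (default /etc/hostname).
write_hostname() {
  local name=$1 file=${2:-/etc/hostname}
  # Reject empty names, leading/trailing hyphens and disallowed characters.
  case $name in
    ''|-*|*-|*[!a-z0-9-]*)
      echo "invalid hostname: $name" >&2
      return 1 ;;
  esac
  # A DNS label is limited to 63 characters.
  if [ "${#name}" -gt 63 ]; then
    echo "hostname longer than 63 characters: $name" >&2
    return 1
  fi
  printf '%s\n' "$name" > "$file"
}
```

For example, `write_hostname ansible-master` run as root writes the same single line as the heredoc above.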

2. Add the Ansible targets to the /etc/hosts file

$ #Execute the below snippet

cat <<EOF >> /etc/hosts
192.168.166.10 master1
192.168.166.11 master2
192.168.166.7 worker1
192.168.166.8 worker2
192.168.166.9 haproxy
EOF
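Running the heredoc above a second time appends the same lines again. A sketch of an idempotent alternative uses a hypothetical `add_host_entry` helper that appends an `IP name` pair only if that exact pair is not already present. The file defaults to /etc/hosts but can be pointed at a scratch copy for testing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file only if that
# pair is not already there, so re-running the setup adds no duplicates.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  if [ -f "$file" ] && awk -v ip="$ip" -v name="$name" '
       # Look for a line whose address matches and whose names include NAME.
       $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit !found }' "$file"; then
    return 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (as root):
#   add_host_entry 192.168.166.10 master1
```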

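3. Generate an RSA key pair and copy the public key to ~/.ssh/authorized_keys on all the Ansible targets. Run this step as the kubeadmin user (type exit to leave the root shell opened in step 1), because the inventory below connects as kubeadmin with the key /home/kubeadmin/.ssh/id_rsa.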

$ # Copy the snippet and execute it on ansible-master (replace with your hostnames)

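servers="haproxy master1 master2 worker1 worker2"
# '<<< y' answers the overwrite prompt if ~/.ssh/id_rsa already exists
ssh-keygen -t rsa -f ~/.ssh/id_rsa -N '' <<< y
for n in $servers
do
  # Appends the public key to ~/.ssh/authorized_keys on each target
  ssh-copy-id -i ~/.ssh/id_rsa.pub "$n"
done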
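The loop above does not report at the end which targets failed, for example because a VM was down or a password was mistyped. A sketch of a wrapper that runs a command once per host and lists the failures follows; `for_each_host`, `copy_key` and `check_login` are hypothetical names. `check_login` relies on the real ssh option `BatchMode=yes`, which makes ssh fail instead of prompting for a password, so it only succeeds once key-based login works.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run CMD once per host and report the hosts where
# it failed. Usage: for_each_host CMD HOST...
for_each_host() {
  local cmd=$1 host failed=""
  shift
  for host in "$@"; do
    if ! "$cmd" "$host"; then
      failed="$failed $host"
    fi
  done
  if [ -n "$failed" ]; then
    echo "failed on:$failed" >&2
    return 1
  fi
}

# Hypothetical wrappers around the real commands used in this step.
copy_key()    { ssh-copy-id -i ~/.ssh/id_rsa.pub "$1"; }
# Fails instead of prompting, so success means key-based login works.
check_login() { ssh -o BatchMode=yes "$1" true; }
```

For example, run `for_each_host copy_key haproxy master1 master2 worker1 worker2`, then `for_each_host check_login` with the same host list to confirm passwordless access.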

4. Create a working directory for the k8s playbooks.

$ mkdir -p ~/k8s/playbooks && cd ~/k8s/playbooks

$ # Execute the following snippet in the working directory

# replace ansible_host with your IP address or FQDN

cat <<EOF > inventory
haproxy ansible_host=192.168.166.9 ansible_user=kubeadmin ansible_ssh_private_key_file=/home/kubeadmin/.ssh/id_rsa
master1 ansible_host=192.168.166.10 ansible_user=kubeadmin ansible_ssh_private_key_file=/home/kubeadmin/.ssh/id_rsa
master2 ansible_host=192.168.166.11 ansible_user=kubeadmin ansible_ssh_private_key_file=/home/kubeadmin/.ssh/id_rsa
worker1 ansible_host=192.168.166.7 ansible_user=kubeadmin ansible_ssh_private_key_file=/home/kubeadmin/.ssh/id_rsa
worker2 ansible_host=192.168.166.8 ansible_user=kubeadmin ansible_ssh_private_key_file=/home/kubeadmin/.ssh/id_rsa

[lb]
haproxy

[master]
master1
master2

[worker]
worker1
worker2

[k8s]
master1
master2
worker1
worker2
EOF
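Instead of editing the heredoc by hand, the inventory can be generated from an address table. This is a sketch with hypothetical variable and function names (`ANSIBLE_USER`, `KEY_FILE`, `NODE_TABLE`, `render_inventory`). One deliberate difference from the hand-written file: the `k8s` group is declared as `[k8s:children]` containing the `master` and `worker` groups, which is standard Ansible INI syntax and yields the same four hosts.

```shell
#!/usr/bin/env bash
# Assumed connection settings and addresses; adjust to your lab.
ANSIBLE_USER=kubeadmin
KEY_FILE=/home/kubeadmin/.ssh/id_rsa
NODE_TABLE='haproxy 192.168.166.9
master1 192.168.166.10
master2 192.168.166.11
worker1 192.168.166.7
worker2 192.168.166.8'

# Hypothetical generator: host lines first, then the group sections.
render_inventory() {
  printf '%s\n' "$NODE_TABLE" | while read -r name ip; do
    printf '%s ansible_host=%s ansible_user=%s ansible_ssh_private_key_file=%s\n' \
      "$name" "$ip" "$ANSIBLE_USER" "$KEY_FILE"
  done
  printf '\n[lb]\nhaproxy\n\n[master]\nmaster1\nmaster2\n\n[worker]\nworker1\nworker2\n\n[k8s:children]\nmaster\nworker\n'
}
```

Write the file with `render_inventory > inventory`, then confirm the group layout with `ansible-inventory -i inventory --graph` and the SSH setup with `ansible all -i inventory -m ping`.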

Now that the ansible-master (controller) is configured, reboot the VM so that the new hostname written to /etc/hostname takes effect. (The /etc/hosts entries are used as soon as they are written and do not by themselves require a reboot.)

$ sudo reboot

Log back in; the shell prompt should now show the new hostname.
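To check whether the reboot actually picked up the new name, compare the first line of /etc/hostname with the name the running system reports (`uname -n`). `hostname_change_pending` is a hypothetical helper sketched for that purpose; it takes the file path as an optional argument so it can be tried against a scratch file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns 0 (and prints a note) if the name in the
# hostname file differs from the running system's name, i.e. the change
# has not been applied yet; returns 1 if they already match.
hostname_change_pending() {
  local file=${1:-/etc/hostname} wanted current
  wanted=$(head -n 1 "$file")
  current=$(uname -n)
  if [ "$wanted" != "$current" ]; then
    echo "pending: running as '$current', $file says '$wanted'"
    return 0
  fi
  return 1
}
```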
